Robots.txt File: What Is It and How to Optimize It for SEO

The robots.txt file sits in the root of your WordPress website and tells search engines which URLs, files, and file types you want them to crawl. Whether you’re a beginner or a seasoned WordPress user, understanding the purpose and proper usage of the robots.txt file is crucial.

Telling Google and other search engines which content to crawl can help them index your most relevant content faster.

In this guide, we will mainly learn about:

👉 What is a robots.txt file in WordPress?

👉 How to create a robots.txt file in WordPress?

👉 How to optimize a robots.txt file in WordPress?

👉 How to test a robots.txt file?

By the end, you’ll know how to improve the way your WordPress website is crawled and indexed.

What Is a Robots.txt file?

A robots.txt file in your WordPress website’s root directory tells search engines which pages to crawl and which to ignore, and adding one is a common SEO practice. In general, search engine bots (crawlers) scan all the publicly available pages of your website in a process known as crawling.

Before crawling your site, search engine bots read the rules in your robots.txt file to determine which URLs they may crawl. One thing should be clear here: the robots.txt file controls crawling, not indexing. A URL blocked in robots.txt can still appear in search results if other pages link to it, so to keep a page out of the index, use a noindex meta tag instead.

What Does Robots.txt Look Like?

Now that we know what the robots.txt file does, let’s see what it looks like. It’s a simple text file, and it usually stays short no matter how big the website is, because most website owners only set a few rules based on their requirements.

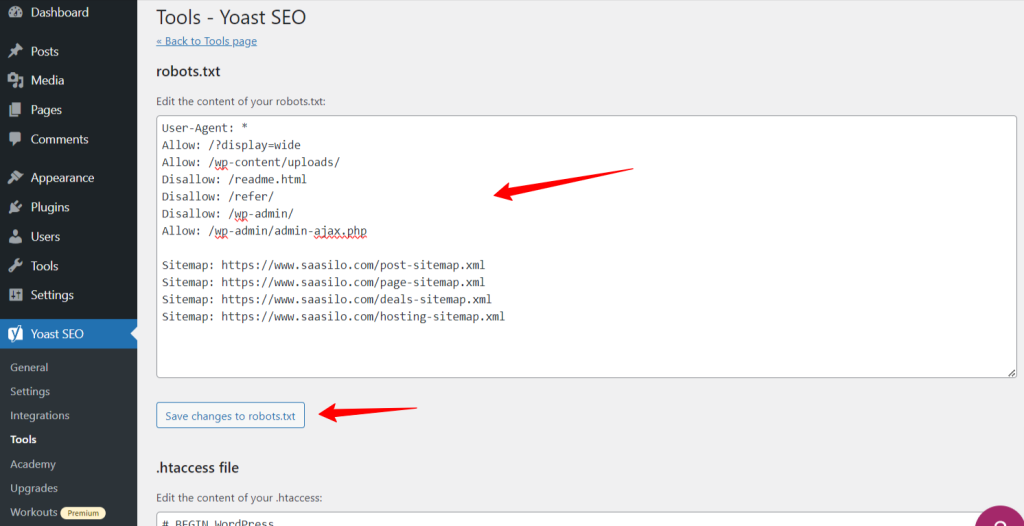

Here’s an example of a robots.txt file:

User-agent: *

Allow: /?display=wide

Allow: /wp-content/uploads/

Disallow: /readme.html

Disallow: /refer/

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.saasilo.com/post-sitemap.xml

Sitemap: https://www.saasilo.com/page-sitemap.xml

Sitemap: https://www.saasilo.com/deals-sitemap.xml

Sitemap: https://www.saasilo.com/hosting-sitemap.xml

In the robots.txt file above, the rules tell crawlers which URLs and files on the website they may or may not access.

Here’s the breakdown of this robots.txt file:

User-agent: *: Applies the following rules to all web crawlers.

Allow: /?display=wide: Allows crawlers to access URLs that begin with /?display=wide, that is, the homepage with ?display=wide in the query string.

Allow: /wp-content/uploads/: Allows search engine bots to crawl all files and directories within /wp-content/uploads/, such as your media library images.

Disallow: /readme.html: Prevents crawlers from accessing the readme.html file.

Disallow: /refer/: Blocks crawlers from accessing the /refer/ directory and all its contents.

Disallow: /wp-admin/: Prevents crawlers from accessing the /wp-admin/ directory and its contents.

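Allow: /wp-admin/admin-ajax.php: Allows crawlers to access the admin-ajax.php file inside the otherwise blocked /wp-admin/ directory. WordPress themes and plugins use this file for AJAX requests on the front end, and because this rule is more specific than Disallow: /wp-admin/, it takes precedence for this one file.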

Sitemap: Lastly, these lines point search bots to the website’s XML sitemaps so they can discover the URLs listed in them. Sitemap lines apply to all crawlers, regardless of the User-agent line.
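When two rules match the same URL, as Disallow: /wp-admin/ and Allow: /wp-admin/admin-ajax.php both do for admin-ajax.php, Google follows the most specific rule (the one with the longest matching path) and lets Allow win a tie. The Python sketch below illustrates that precedence for the example file above. It’s a simplified illustration, not a crawler implementation: the function name is hypothetical, and it treats every path as a plain prefix, ignoring the * and $ wildcards. Note that Python’s standard urllib.robotparser module applies the first matching rule in file order instead, so it would treat admin-ajax.php as blocked here.

```python
# Rules from the example robots.txt above, as (directive, path) pairs.
RULES = [
    ("allow", "/?display=wide"),
    ("allow", "/wp-content/uploads/"),
    ("disallow", "/readme.html"),
    ("disallow", "/refer/"),
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]


def is_allowed(path: str, rules=RULES) -> bool:
    """Return True if longest-match precedence lets a crawler fetch path.

    Simplified sketch: rule paths are plain prefixes (no * or $ wildcards).
    """
    best_len = -1
    allowed = True  # no matching rule means the URL may be crawled
    for directive, rule_path in rules:
        # An empty path (e.g. "Disallow:") matches nothing.
        if not rule_path or not path.startswith(rule_path):
            continue
        length = len(rule_path)
        # A longer (more specific) match wins; on a tie, Allow wins.
        if length > best_len or (length == best_len and directive == "allow"):
            best_len = length
            allowed = directive == "allow"
    return allowed
```

For example, is_allowed("/wp-admin/admin-ajax.php") returns True, while is_allowed("/wp-admin/options.php") returns False.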

Do You Need a Robots.txt File for Your WordPress Site?

Now, let’s look at why a well-configured robots.txt file matters for a WordPress website. Here are the main roles it plays in managing your site’s performance and visibility.

➡️ Control Over Crawling: Search engine bots are smart enough to find all of your website’s public pages. But some areas, such as plugin pages and WordPress system files, have no value in search results. A robots.txt file helps you keep crawlers out of these irrelevant areas.

➡️ Faster Indexing for SEO: As mentioned, the robots.txt file doesn’t directly control the indexing of your web pages. However, it can still speed up indexing: when you keep crawlers away from unnecessary and irrelevant pages, they spend more of their time on the pages you want indexed.

➡️ Less Bandwidth Usage: When you tell search engine bots which areas to crawl, they don’t need to roam all over your website. This reduces server resource and bandwidth usage, which can also improve your website’s overall performance.

➡️ No Duplicate Content Issue: Publishing duplicate content is a common, unwanted blunder. It confuses search engine bots about which version to index, and the original content may end up ranking lower. Disallowing the URL patterns that create duplicates, such as filtered or sorted versions of a page, reduces how often crawlers fetch those copies. Because robots.txt controls crawling rather than indexing, though, it can’t guarantee that duplicate versions never get indexed.

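➡️ Keep Out Unwanted Bots & Crawlers: You can add rules that ask specific crawlers, identified by their user-agent name, to stay away from your site, and you can disallow your admin pages from being crawled. Keep in mind that robots.txt is a voluntary standard: reputable search engines follow it, but spammy or malicious bots can simply ignore it, so it doesn’t add real security on its own.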

How to Create a Robots.txt File in WordPress?

Now that we understand the importance of adding a robots.txt file to a WordPress website, the next step is learning how to create one. Below are two methods for creating a robots.txt file on your WordPress website.

Method 1: Create a Robots.txt File Using a Plugin

The easiest way to create and edit the robots.txt file is with a plugin. In your WordPress dashboard, go to Plugins > Add New, then install and activate an SEO plugin like Yoast SEO or Rank Math.

Here’s how each plugin handles it:

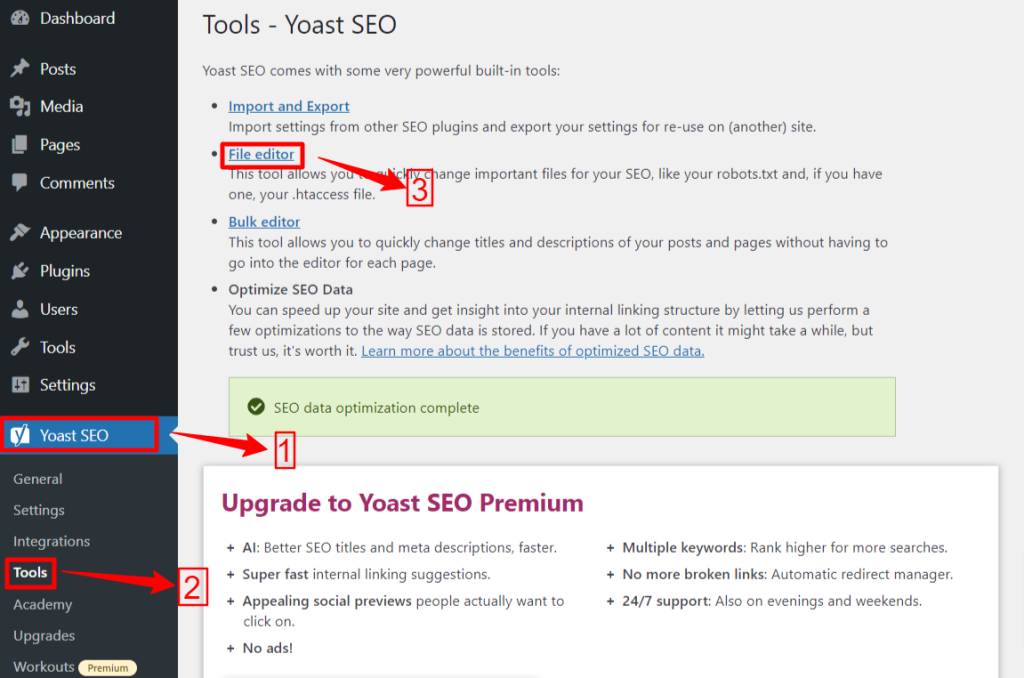

🟢 Yoast SEO:

If you’re using Yoast SEO, go to Yoast SEO > Tools in your WP admin area and click File Editor. If you don’t have a robots.txt file yet, click “Create robots.txt file” to open the editor screen.

After editing your robots.txt file, click “Save changes to robots.txt”. That’s it.

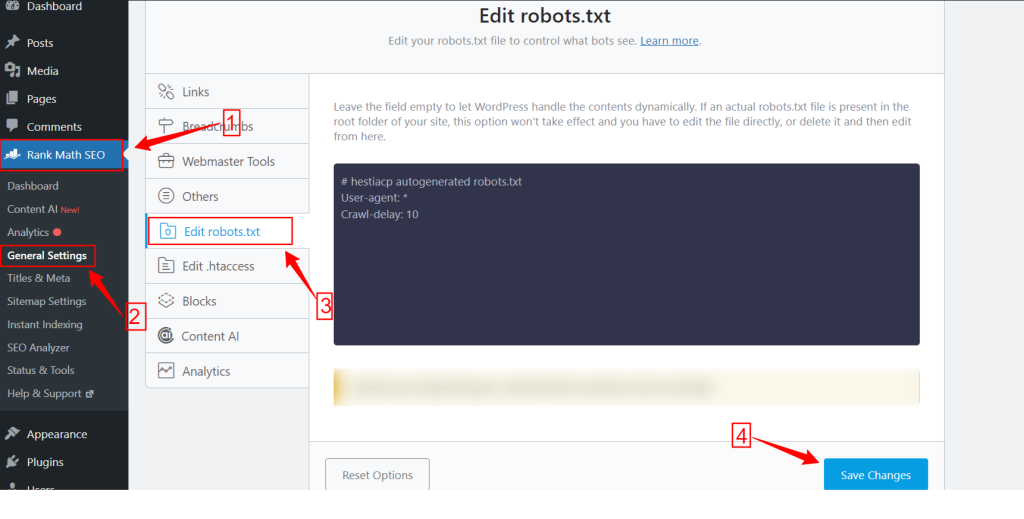

🟢 Rank Math:

If you’re using Rank Math, go to Rank Math in your WordPress dashboard and click General Settings. Then, select the Edit robots.txt tab, make your changes on the robots.txt screen, and click the Save Changes button once you’re done.

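NB: Rank Math edits the virtual robots.txt that WordPress generates. If a physical robots.txt file already exists in your website’s root folder, it takes priority and Rank Math can’t edit it, so delete that file first using an FTP client.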

Method 2: Create Robots.txt File Manually Using FTP

The manual process of adding a robots.txt file requires you to access your WordPress hosting with an FTP client such as FileZilla, WinSCP, or Cyberduck. You can usually find your FTP login details in cPanel.

The robots.txt file must be uploaded to your website’s root folder. Crawlers only look for it there, so a robots.txt file placed in a subdirectory is ignored.
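Crawlers never search your folders for the file; they derive its address from the host of each URL they plan to visit. A minimal Python sketch of that lookup (the robots_url helper is hypothetical) shows why a copy in a subdirectory is ignored and why each subdomain needs its own file:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the single robots.txt location crawlers check for page_url's host."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace path, query, fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))
```

For instance, robots_url("https://www.example.com/blog/post/?ref=1") returns "https://www.example.com/robots.txt", so a file at /blog/robots.txt is never consulted.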

If your root folder doesn’t contain a robots.txt file yet, you can create one. On your local computer, open a plain text editor like Notepad and enter the directives below:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Save this file as robots.txt. Then, in your FTP client, upload the robots.txt file to the website’s root folder (in FileZilla, right-click the file in the local pane and choose “Upload”).
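If you prefer a script to Notepad, the Python sketch below saves the same starter rules, preceded by a User-agent line, as a plain UTF-8 text file. The write_robots_txt function and its folder argument are hypothetical; the file name itself must be exactly robots.txt, in lowercase.

```python
from pathlib import Path

# Starter rules: the same directives you would type into Notepad.
ROBOTS_TXT = """User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
"""


def write_robots_txt(folder: str) -> Path:
    """Save ROBOTS_TXT as folder/robots.txt, ready to upload to the site root."""
    path = Path(folder) / "robots.txt"  # the name must be exactly robots.txt
    # UTF-8 plain text; newline="\n" keeps Unix line endings even on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(ROBOTS_TXT)
    return path
```

Calling write_robots_txt(".") creates robots.txt in the current folder, which you can then upload with your FTP client.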

To open your new or existing robots.txt file later, right-click it in the FTP client and select the “Edit” option (“View/Edit” in FileZilla). Make your changes, then save the file. Finally, check that it’s live by visiting your domain name followed by /robots.txt, such as yourdomain.com/robots.txt.

How to Optimize Your WordPress robots.txt for SEO

Now that we know how to create and edit a robots.txt file on a WordPress website, let’s focus on the best practices to optimize the robots.txt file for your WordPress site.

✅ Create a Concise File

Keep your robots.txt file short and simple. A concise file is easier for crawlers to interpret and for you to maintain, and it lowers the risk of conflicting or mistaken rules.

✅ Exclude WP Directories & Files

Certain WordPress files and directories don’t belong in search results, such as admin screens and system files. When you disallow them in your robots.txt file, compliant search bots skip these areas, so your crawl resources go to the pages that matter.

Here are some of the WordPress directories and files to consider:

/wp-admin/ and /wp-includes/: These directories hold the admin area and the core files that make the entire WordPress website work. Pages here add nothing to search results, so many site owners add Disallow directives for them. Be careful with /wp-includes/, though: it also contains scripts and styles that your pages load, and blocking them can stop Google from rendering your pages correctly.

Disallow: /wp-admin/

Disallow: /wp-includes/

Login Page: Search engines have no reason to crawl your login page either. To stop them from crawling it:

Disallow: /wp-login.php

readme.html: The readme page can reveal information like your website’s current WordPress version to potential hackers. Blocking it from crawling makes it less likely to surface in search results:

Disallow: /readme.html

Tag and Category Pages: These archive pages can waste crawl budget when they add little value for search queries, and because they list the same posts, they can look like duplicate content to search engines.

Disallow: /tag/

Disallow: /category/

Search Results: The same reasoning applies to your internal search results pages, which WordPress serves at URLs like /?s=keyword:

Disallow: /?s=

Disallow: /search/

Referral Links: Affiliate programs produce referral links that don’t need to be crawled either. If yours use a path like /refer/, block it:

Disallow: /refer/

✅ Insert Sitemap Link

Many WordPress users submit their sitemap to Google Search Console or Bing Webmaster Tools to track indexing and performance data. Adding a Sitemap line to your robots.txt file also helps crawlers discover your website’s content, including crawlers from search engines where you haven’t submitted it.

A sitemap can help your website get indexed faster because it lets search engines easily follow your site’s structure and hierarchy. It lists the pages you want crawled, and it can point crawlers to the latest updates on your website.
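Sitemap lines take a full URL and apply to every crawler, wherever they appear in the file. To confirm which sitemaps your robots.txt advertises, Python’s standard urllib.robotparser module can read them. This sketch assumes Python 3.9 or later, reuses sitemap URLs from the example file earlier in this guide, and the sitemaps_from helper is hypothetical:

```python
from urllib.robotparser import RobotFileParser

EXAMPLE_ROBOTS = """User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://www.saasilo.com/post-sitemap.xml
Sitemap: https://www.saasilo.com/page-sitemap.xml
"""


def sitemaps_from(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in a robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap lines.
    return parser.site_maps() or []
```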

✅ Effective Use of Wildcards

Wildcards let you define pattern-based rules that block or allow a whole group of URLs or specific file types. That way, you don’t need to spend time restricting many pages one by one.

There are two wildcard characters: the asterisk (*) and the dollar sign ($). Let’s look at each:

Asterisk (*): The most common wildcard. It matches any sequence of characters, so a single rule can apply to a whole group of pattern-matched URLs or file types. In a User-agent line, * stands for all crawlers:

User-agent: *

Disallow: /

The above code disallows all search engines from crawling any part of the website.

Dollar Sign ($): This marks the end of the URL, so you can allow or block URLs that end with a specific extension, such as .jpg or .docx:

Disallow: /*.jpg$

Disallow: /*.docx$

You can also be more strategic by combining path rules with wildcards. For example, you can block or allow:

Specific directories: Will block any URLs that start with /private/, including any files or subdirectories within it.

Disallow: /private/

URLs with specific parameters: Will block all URLs that contain the query parameter sort, such as /category/?sort=asc or /shop/?page=2&sort=desc.

Disallow: /*?sort=

Disallow: /*&sort=

Specific patterns: Will block all URLs that include the word “checkout”, such as /product/checkout or /cart/checkout.

Disallow: /*checkout

Allowing Specific Files or Directories within Blocked Directories: Will block all URLs under /wp-admin/ except for admin-ajax.php

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php
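Google and Bing support both wildcards, and they are part of the Robots Exclusion Protocol standard (RFC 9309), but smaller crawlers may not honor them. To see how a pattern matches a URL path, here is a minimal Python sketch that translates a rule into a regular expression. The function names are hypothetical, and the sketch only decides whether one rule matches; it doesn’t resolve Allow/Disallow conflicts or handle percent-encoding.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression.

    * matches any sequence of characters; a trailing $ anchors the end of the URL.
    Matching starts at the beginning of the path, so a pattern without * or $
    behaves as a plain prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape regex metacharacters such as . and ?, then join the pieces with ".*".
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, url_path: str) -> bool:
    # re.match only matches at the start of the string, mirroring prefix matching.
    return pattern_to_regex(pattern).match(url_path) is not None
```

For example, matches("/*.jpg$", "/wp-content/uploads/photo.jpg") is True, but matches("/*.jpg$", "/photo.jpg?size=large") is False because the URL doesn’t end in .jpg.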

✅ Use Comments

To make your robots.txt file easier to read, add comments. Start a comment with a hash (#) sign; crawlers ignore everything from the # to the end of the line.
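Because crawlers drop everything from # to the end of the line, comments never change how your rules behave. The hypothetical parse_robots function below is a minimal Python sketch of that step, not any search engine’s actual parser:

```python
def parse_robots(text: str) -> list[tuple[str, str]]:
    """Split robots.txt text into (field, value) pairs, dropping comments."""
    records = []
    for raw_line in text.splitlines():
        # Everything from the first # to the end of the line is a comment.
        line = raw_line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue  # blank, comment-only, or malformed line
        field, value = line.split(":", 1)
        # Field names are case-insensitive; values keep their case.
        records.append((field.strip().lower(), value.strip()))
    return records


EXAMPLE = """# Keep crawlers out of the admin area
User-agent: *
Disallow: /wp-admin/  # admin screens
Allow: /wp-admin/admin-ajax.php
"""
```

Here parse_robots(EXAMPLE) yields only the three rules; both comments disappear.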

✅ Monitor Crawling Behavior

After uploading your robots.txt file, use search engine tools like Google Search Console or Bing Webmaster Tools to check for any crawling and indexing issues.

✅ Specify Rules for Search Bots

Another effective strategy is to create separate rules for different search engine bots in your robots.txt file. Specifying how each bot may access and crawl your website gives you finer control over how your site is crawled.
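Each rule group starts with one or more User-agent lines, and a crawler obeys only one group: the one naming it, or the * group if none does. That means a bot with its own group ignores your general rules. The Python sketch below shows that selection; parse_groups and rules_for are hypothetical names, and the sketch is simplified to exact crawler-name matches.

```python
def parse_groups(text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each user-agent name (lowercased) to the Allow/Disallow rules in its group."""
    groups: dict[str, list[tuple[str, str]]] = {}
    current_agents: list[str] = []
    in_rules = False
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                current_agents, in_rules = [], False
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif field in ("allow", "disallow") and current_agents:
            in_rules = True
            for agent in current_agents:
                groups[agent].append((field, value))
    return groups


def rules_for(crawler: str, groups) -> list[tuple[str, str]]:
    """Pick the group for a crawler: its own name if listed, else the * group."""
    name = crawler.lower()
    if name in groups:
        return groups[name]
    return groups.get("*", [])


EXAMPLE = """User-agent: *
Disallow: /wp-admin/

User-agent: Bingbot
Disallow: /refer/
"""
```

With this file, Bingbot follows only its own group, so Disallow: /wp-admin/ no longer applies to it; repeat any shared rules inside each bot-specific group.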

✅ Consider Mobile and AMP Versions

Similarly, if your site serves separate mobile or AMP versions of its pages, check that your robots.txt directives don’t block them unintentionally, and adjust the rules where needed.

✅ Update Your Robots.txt File

Creating a robots.txt file is usually one of your first tasks when you start building your WordPress website. However, review it regularly for changes in your website’s structure and for search engine updates, and update the file when needed.

✅ Don’t Experiment on a Live Site

Because the robots.txt file controls how search engines crawl your entire website, a single wrong rule, such as Disallow: / under User-agent: *, can block your whole site. So avoid experimenting with it. If you’re unsure about a rule, get expert help or keep your robots.txt file simple.

✅ Final Check

Before uploading or saving changes to your robots.txt file, always give it a final check.
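Part of that final check can be automated. The hypothetical lint_robots function below is a minimal Python sketch that flags a few common mistakes: misspelled or unsupported directives (Google supports only user-agent, allow, disallow, and sitemap), rules placed before any User-agent line, paths that don’t start with / or *, Sitemap lines without a full URL, and files over Google’s 500 KiB size limit. It only catches these syntax slips, not rules that block the wrong pages.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}
MAX_BYTES = 500 * 1024  # Google ignores content past 500 KiB


def lint_robots(text: str) -> list[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    warnings = []
    if len(text.encode("utf-8")) > MAX_BYTES:
        warnings.append("File is larger than 500 KiB; Google ignores the rest.")
    seen_user_agent = False
    for number, raw_line in enumerate(text.splitlines(), start=1):
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"Line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            warnings.append(f"Line {number}: directive '{field}' is not supported by Google")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                warnings.append(f"Line {number}: rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                warnings.append(f"Line {number}: path should start with '/' or '*'")
        elif field == "sitemap" and not value.startswith(("http://", "https://")):
            warnings.append(f"Line {number}: Sitemap needs a full URL")
    return warnings
```

A clean file returns an empty list; a typo such as Disalow shows up as an unsupported directive.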

How to Test Your Robots.txt File

Up to this point, you’ve learned how to edit and optimize the robots.txt file on your WordPress website. However, this knowledge isn’t complete without understanding how to test it. Testing is crucial to ensure that search engine bots are crawling your site accurately.

One primary method is Google Search Console’s robots.txt report, which replaced the older robots.txt testing tool. It shows whether Google could fetch your robots.txt file and flags any problems with it.

If your website is added to Google Search Console, log in and select your website’s property. Then open the robots.txt report from the Settings page to see the robots.txt file Google last fetched.

The report lists any errors and syntax warnings. Fix them, upload the corrected robots.txt file, and request a recrawl from the same report so Google picks up the new version.

Wrap Up!

Understanding and optimizing your robots.txt file is a crucial part of technical SEO and effective website management. We hope this guide helps you optimize your WordPress robots.txt file for SEO.

Now you can confidently configure which parts of your website search engines should crawl, which should help improve your site’s crawling and indexing efficiency as well as its overall performance.